UAS data that is gathered conscientiously can provide much more insight than what is visible at face value. Depending on the skills of the operator and the analyst, a surprising amount of information can be extracted from a UAS data set. This lab activity demonstrates this with instructions for classifying aerial imagery to determine surface types. Only the first two steps of the tutorial need to be completed for this lab activity. The instructions are adapted from a tutorial created by Esri, and the full set of instructions can be found here:

https://learn.arcgis.com/en/projects/calculate-impervious-surfaces-from-spectral-imagery/

In this lab activity, ArcGIS Pro is used to classify surfaces as pervious or impervious. Pervious surfaces allow water to pass through them, whereas impervious surfaces do not allow water or other liquids to pass through. Examples of pervious surfaces in this lab include bare earth, grass, and water; examples of impervious surfaces include roofs, driveways, and roads.

So, why would it be useful to classify surfaces this way? The tutorial gives a clear example. Many governments charge fees to landowners whose property contains substantial amounts of impervious surface, so this classification can be used to calculate those fees parcel by parcel. Here, a parcel is a specific plot of land. Examples of parcels can be found below.
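The per-parcel fee calculation can be sketched as follows. This is a minimal illustration, assuming the classified image and the parcel boundaries have already been converted to two same-shaped grids; the class code `IMPERVIOUS`, the cell area, and the fee rate are hypothetical values, not figures from the tutorial or from any real fee schedule.

```python
IMPERVIOUS = 1  # hypothetical class code for impervious cells


def impervious_fees(classified, parcel_ids, cell_area_m2, rate_per_m2):
    """Return {parcel_id: (impervious_area_m2, fee)}.

    classified and parcel_ids are same-shaped grids (lists of rows); a
    parcel_id of None marks a cell that lies outside every parcel.
    cell_area_m2 and rate_per_m2 are illustrative assumptions.
    """
    counts = {}
    for class_row, parcel_row in zip(classified, parcel_ids):
        for cls, pid in zip(class_row, parcel_row):
            if pid is None:
                continue  # skip cells outside any parcel
            # Count impervious cells; parcels with none still get an entry.
            counts[pid] = counts.get(pid, 0) + (1 if cls == IMPERVIOUS else 0)
    return {pid: (n * cell_area_m2, n * cell_area_m2 * rate_per_m2)
            for pid, n in counts.items()}
```

Counting whole cells keeps the sketch simple; a real workflow would more likely intersect the classified raster with the parcel polygons inside the GIS.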

Methods:

Part 1: Segmenting the Imagery:

Before the imagery is classified, the band combination must be adjusted so that the different features are easy to see. In aerial imagery, a "band" is a single layer within a raster data set.

(Figure 1: original map with parcels)

Figure 1 above shows the map as it first appears when imported into ArcGIS Pro. The area shown is a neighborhood in Louisville, Kentucky, one of the areas known to charge fees for impervious surfaces. The yellow lines represent parcels, or individual land lots.

(Figure 2: Bands)

Figure 2 above shows the different band layers within the raster data set.

(Figure 3: Extract Bands Function)

Figure 3 shows the raster function used in this step: the "Extract Bands" function. Raster functions apply an operation to an image "on the fly," which means the original data remains unchanged and no new data set is created. Instead, the output is a layer that exists only within the current project. Here, the Extract Bands function creates a new image containing only the three bands that will be used to tell pervious and impervious surfaces apart.

(Figure 4: Extract Bands Parameters)

Figure 4 displays the parameters for the Extract Bands function. Band IDs is used as the method of identifying the bands because it is the simplest: it labels each band with a single-digit number. This function uses band 4, band 1, and band 3. Band 4 is near infrared, which emphasizes vegetation. Band 1 is red, which emphasizes vegetation as well as human-made objects.
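The "on the fly" behavior and the 1-based Band IDs described above can be sketched with a small read-only view. This is an illustrative assumption, not how ArcGIS Pro implements raster functions: the raster is modeled as a plain list of 2-D bands, and the class name `ExtractBandsView` and its `pixel` method are hypothetical.

```python
class ExtractBandsView:
    """Hypothetical read-only view exposing selected bands of a raster.

    raster: list of 2-D bands (each a list of rows). band_ids: 1-based band
    IDs, e.g. [4, 1, 3]. No band data is copied; each value is looked up in
    the source raster when it is read, so the source stays unchanged.
    """

    def __init__(self, raster, band_ids):
        for band_id in band_ids:
            if not 1 <= band_id <= len(raster):
                raise ValueError(f"band ID {band_id} is outside 1..{len(raster)}")
        self._raster = raster
        self._band_ids = list(band_ids)

    def __len__(self):
        """Number of output bands."""
        return len(self._band_ids)

    def pixel(self, band_index, row, col):
        """Value of the band_index-th output band (0-based) at (row, col)."""
        source_band = self._raster[self._band_ids[band_index] - 1]  # 1-based ID
        return source_band[row][col]
```

Because the view only stores references and band IDs, a change to the source raster shows up the next time a pixel is read, which mirrors why a raster function layer does not need a new data set on disk.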

(Figure 5: Extract Bands Layer with Parcels)

(Figure 6: Extract Bands Layer with no Parcels)

After the Extract Bands function is run, the new layer should look like Figures 5 and 6 above. The only difference between the two images is that the parcel layer is hidden in Figure 6. In these images, vegetation appears red, roads appear gray, and roofs tend to appear gray or blue. The next step in the process uses the Classification Wizard.
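Why vegetation looks red can be sketched by drawing the three extracted bands as a color composite, assuming the first extracted band (band 4, near infrared) is drawn in the red channel. The min-max stretch and the digital-number values below are hypothetical; ArcGIS Pro applies its own stretch settings.

```python
def stretch(band):
    """Min-max stretch of a 2-D band to integers in 0-255."""
    values = [v for row in band for v in row]
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1  # a constant band maps to 0 instead of dividing by zero
    return [[round(255 * (v - lo) / span) for v in row] for row in band]


def false_color(bands):
    """Combine three bands into rows of (R, G, B) tuples.

    bands[0] -> red channel, bands[1] -> green, bands[2] -> blue.
    """
    red, green, blue = (stretch(b) for b in bands)
    return [list(zip(r_row, g_row, b_row))
            for r_row, g_row, b_row in zip(red, green, blue)]
```

With made-up values for a vegetation, road, water, and roof pixel, the vegetation pixel's high near-infrared value dominates the red channel, while a pixel with similar values in all three bands comes out gray.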

(Figure 7: Image Classification Configuration)

(Figure 8: Setting Output Location)

Figures 7 and 8 show the parameters adjusted within the Image Classification Wizard. The classification schema is set by choosing the "Use default schema" option from the drop-down menu. The output location also needs to be changed to the "neighborhood data". The next step in this process is to segment the image.

(Figure 8: Segmented Image Preview)

To segment the image, three parameters must be adjusted: spectral detail, spatial detail, and minimum segment size in pixels.

Spectral detail is set to 8, spatial detail is set to 2, and minimum segment size is set to 20. Spectral detail determines how much importance is assigned to spectral differences between pixels, on a scale of 1 to 20. A high value means pixels must be more similar to be grouped together, which also produces more segments. Since impervious and pervious surfaces have very different spectral signatures, a relatively low value is used in this scenario.
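The effect of spectral detail described above can be illustrated with a toy region-growing segmentation on a single band. This is not Esri's Segment Mean Shift algorithm; it is a minimal sketch that only shows the direction of the parameter, and the mapping `tolerance = 21 - spectral_detail` (in digital-number units) is a hypothetical assumption.

```python
from collections import deque


def segment(band, spectral_detail):
    """Toy region growing on one 2-D band; returns (labels, segment_count).

    A 4-connected neighbor joins a segment when its value is within
    `tolerance` of the segment's seed pixel. Hypothetical mapping: the
    tolerance shrinks as spectral_detail (1-20) grows, so higher detail
    yields more, smaller segments.
    """
    if not 1 <= spectral_detail <= 20:
        raise ValueError("spectral_detail must be between 1 and 20")
    tolerance = 21 - spectral_detail  # hypothetical mapping
    rows, cols = len(band), len(band[0])
    labels = [[None] * cols for _ in range(rows)]
    count = 0
    for r in range(rows):
        for c in range(cols):
            if labels[r][c] is not None:
                continue  # already part of a segment
            seed = band[r][c]
            labels[r][c] = count
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and labels[ny][nx] is None
                            and abs(band[ny][nx] - seed) <= tolerance):
                        labels[ny][nx] = count
                        queue.append((ny, nx))
            count += 1
    return labels, count
```

On a tiny made-up band, raising spectral detail from 8 to 18 splits the same pixels into more segments.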

Spatial detail determines how much importance is assigned to the proximity between pixels, again on a scale of 1 to 20. A high value means pixels must be closer to each other to be grouped together.

Unlike the other two parameters, minimum segment size is not set on a scale of 1 to 20; it is measured in pixels. Segments with fewer pixels than this value are merged with neighboring segments. The value should not be unreasonably small, but it also should not be so large that impervious and pervious segments are merged together. Once these settings are adjusted appropriately, the map should look like Figure 8 above.
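The minimum segment size rule can be sketched as a merge pass over a grid of segment labels. The rule for choosing the receiving neighbor (the segment sharing the most pixel edges with the small one) is a hypothetical assumption for illustration; the source only says small segments are merged with neighboring segments.

```python
from collections import Counter


def merge_small_segments(labels, min_size):
    """Merge segments with fewer than min_size pixels into a neighbor.

    labels: 2-D grid of segment IDs. Hypothetical rule: a small segment is
    relabeled to the neighboring segment that shares the most pixel edges
    with it. Returns a new grid; the input grid is not modified.
    """
    rows, cols = len(labels), len(labels[0])
    labels = [row[:] for row in labels]  # work on a copy
    changed = True
    while changed:
        changed = False
        sizes = Counter(v for row in labels for v in row)
        for seg, size in sorted(sizes.items(), key=lambda kv: kv[1]):
            if size >= min_size or len(sizes) == 1:
                continue
            border = Counter()  # neighbor segment ID -> shared edge count
            for r in range(rows):
                for c in range(cols):
                    if labels[r][c] != seg:
                        continue
                    for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
                        if 0 <= nr < rows and 0 <= nc < cols and labels[nr][nc] != seg:
                            border[labels[nr][nc]] += 1
            if border:
                target = border.most_common(1)[0][0]
                labels = [[target if v == seg else v for v in row] for row in labels]
                changed = True
                break  # recompute sizes after each merge
    return labels
```

Merging one segment at a time and recounting sizes keeps the logic simple; it is slow on real imagery but easy to follow.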

(Figure 9: first training sample)

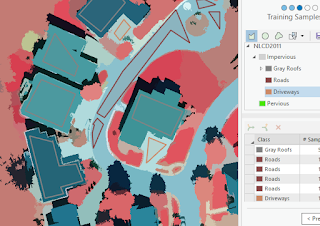

The next step in this process is to create training samples so that the imagery can be further classified into specific pervious and impervious surfaces. This begins with creating two parent classes: impervious and pervious. Within these two categories are more specific subclasses. Impervious subclasses were created for roofs, roads, and driveways; pervious subclasses were created for bare earth, grass, water, and shadows.
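The two-level class structure described above can be sketched as a simple mapping from parent classes to subclasses, which also makes it easy to roll a subclass back up to "Impervious" or "Pervious" later. The dictionary and the `parent_class` helper are hypothetical illustrations, not the format ArcGIS Pro uses to store a classification schema.

```python
# Hypothetical two-level schema mirroring the classes described above.
SCHEMA = {
    "Impervious": ["Roofs", "Roads", "Driveways"],
    "Pervious": ["Bare Earth", "Grass", "Water", "Shadows"],
}


def parent_class(subclass):
    """Return the parent class (Impervious or Pervious) of a subclass."""
    for parent, children in SCHEMA.items():
        if subclass in children:
            return parent
    raise KeyError(f"unknown subclass: {subclass}")
```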

Once these subclasses are created, training samples are made by drawing polygons over these objects so that the software can learn to identify them. Once the polygons for a single class are drawn, they must be collapsed together into one training sample. Figure 9 shows the first training sample created, Figure 10 displays more training samples for identifying gray roofs, and Figure 11 shows several different types of training samples.
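Collapsing several polygons drawn for the same class into one sample can be sketched as a union of their pixels. This assumes each polygon has already been converted to a set of (row, col) pixel coordinates, which is a simplifying assumption; the function name `collapse_samples` is hypothetical.

```python
def collapse_samples(polygons):
    """Merge polygons drawn for the same class into one sample per class.

    polygons: list of (class_name, set_of_(row, col)_pixels) pairs. Pixels
    covered by overlapping polygons are counted once in the merged sample.
    """
    collapsed = {}
    for class_name, pixels in polygons:
        collapsed.setdefault(class_name, set()).update(pixels)
    return collapsed
```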

(Figure 10: More roof training samples)

(Figure 11: Different types of training samples)

The final step in this process is classifying the image. To do this, the classifier must be set to "Support Vector Machine" and the maximum number of samples per class must be set to 0. This second setting ensures that all of the training samples are used.
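The "maximum number of samples per class" setting can be sketched as a cap in which 0 means "keep every sample." Random subsampling when the cap is exceeded is an assumption made for illustration; the source only states that 0 uses all training samples. The SVM training itself is not shown.

```python
import random


def select_training_samples(samples_by_class, max_per_class, seed=0):
    """Cap the number of training samples per class.

    max_per_class == 0 keeps every sample, matching the setting described
    above. Otherwise a class with too many samples is randomly subsampled
    (an assumption; the actual selection rule may differ).
    """
    if max_per_class < 0:
        raise ValueError("max_per_class must be 0 or positive")
    rng = random.Random(seed)
    selected = {}
    for class_name, samples in samples_by_class.items():
        if max_per_class == 0 or len(samples) <= max_per_class:
            selected[class_name] = list(samples)
        else:
            selected[class_name] = rng.sample(list(samples), max_per_class)
    return selected
```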

Once these parameters are adjusted, the classification is run, and the resulting image should look like Figure 12 below. This image contains a couple of classification errors; a good example is the pond in the lower left corner. These errors can be reclassified manually using polygons.
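Manual reclassification with a polygon can be sketched as assigning a new class to every cell whose center falls inside the polygon, using the standard even-odd (ray casting) point-in-polygon test. The grid representation, the pixel coordinate convention (x = column, y = row, cell centers at +0.5), and the class names are assumptions for illustration, not how ArcGIS Pro's reclassification tools work internally.

```python
def point_in_polygon(x, y, polygon):
    """Even-odd ray casting: True if (x, y) lies inside polygon.

    polygon: list of (x, y) vertices in order; the last vertex connects
    back to the first.
    """
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def reclassify_in_polygon(classified, polygon, new_class):
    """Return a copy of the grid with cells whose centers lie inside
    polygon set to new_class; x is the column and y is the row."""
    return [[new_class if point_in_polygon(c + 0.5, r + 0.5, polygon) else value
             for c, value in enumerate(row)]
            for r, row in enumerate(classified)]
```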

(Figure 12)